I wrote this back in 2010 for a magazine, Virtual Intelligence Briefing.

I haven’t been able to find the original, and people keep asking me, “…how to start with VDI?”

I have not changed a word in this post from years gone by.

While versions have changed, as well as vendors that no longer exist, I believe that the logic applied herein is still valid.

Enjoy the Read!

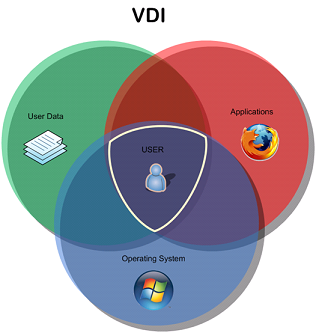

So much has been said about this over the past few years, generating new buzz, new questions, and new methodologies regarding how to deliver a desktop to a user. Delivering a desktop to a user is what we really need to do from an IT organization’s perspective, but just the desktop is not enough…the applications that the end user requires to accomplish his job are just as important as, if not more important than, the desktop itself.

I will be writing as if I were using VMware View 4.0.1, Windows XP, and Windows 7 here. Hopefully, I can present things to consider that are NOT specific to any of the vendors, but are major considerations when rolling out any VDI solution in your organization.

Over the past 4 years, I have been involved with Virtual Desktop Infrastructures (VDI from here on). When it first became a topic of discussion, there was a lot of interest, but no true roadmap existed. Today, with many Connection Brokers available (VMware, Microsoft, Citrix, Leostream, PanoLogic, etc.), many different types of solutions exist. The result I am seeking is to present a desktop, along with all the applications that a user requires, so that the end users’ experience is VERY similar, if not identical, to that of a traditional PC. While keeping the user base happy, finding a way for the desktop teams to transition from reactive desktop support to proactive desktop planning is where we start to see the value from the investment you will make in a VDI solution.

When server virtualization was introduced, we saw dramatic improvements in the efficiency of our server teams’ ability to support large numbers of servers in the virtual world. We took away the risk associated with hardware tied to a physical operating system when virtualizing our servers. Now we look to realize the same benefits as we virtualize our desktops…increasing the number of operating systems each administrator can support, as well as removing the risk of hardware tied to a specific operating system. This is the one variable that can be mitigated by running virtually. I have seen the benefits time and time again over the past 10 years for the server infrastructure, and it has become a reality for desktops over the past 4 years as the technology has matured.

The End Result

When you take on your VDI project internally, you want an identical experience for the end users (their operating system that you support), more security and control over the data you are responsible for securing, an easier (or more proactive at least) method for managing the applications users need, and a consistent identity for each user (the user’s profile from the Windows perspective). With thorough testing/Proof of Concept implementations, you will find you can deliver on these major requirements.

Acknowledging The Change In Desktop Teams’ Responsibilities

So here is a big change in the way the desktop team can work. It is a change because we start the transition from a mostly reactive desktop support model to a proactive desktop support model. Taking on responsibility for managing and updating the applications that end users require, maintaining the operating system that is the base of their experience, and maintaining the users’ profiles is an enormous change in the way most desktop support teams work. It may seem that some of these items cross over into the server team’s responsibilities for some organizations, and that is very probably true. The transition of these responsibilities is inevitable. It is VERY similar to the way that traditional PBX systems have transitioned into VoIP systems that are maintained by server teams and share the same network that data traffic uses. We are starting to see the individual silos of IT merge, and the responsibilities of the traditional teams realign.

Choosing a VDI Desktop Operating System For End Users

The first of 3 major components of the VDI solution. This is always the easiest decision today. When considering the adoption of VDI for your infrastructure, let us change as few things as possible as we kick the tires on a new technology. Most organizations still use Windows XP as their primary desktop operating system, and understand all the little nuances that make it work (or break it, for that matter). So given that we all have a tremendous amount of experience with Windows XP, it is a very natural place to start with a desktop operating system to virtualize. Why would you try to learn a new operating system (anything other than Windows XP) while learning a new methodology to manage, deploy, and support the desktop operating system? Keep the variables in the Proof Of Concept to a minimum, so you can realize success.

Windows Vista…I don’t even consider it.

Windows 7 is a great next step for VDI. Once an organization grasps the new concepts of a VDI solution, it is much easier to then transition to Windows 7 as the underlying desktop operating system that is the base for the users we are supporting.

Managing The Users’ Data & Profile

The second of 3 major components of the VDI solution. The user data, in my opinion, is one of the more important parts of the entire solution. When managing desktops, we want to keep our user base happy, whether that means keeping the users’ favorites/bookmarks, macros, or wallpaper.

There are many tools available to manage user profiles. Windows, VMware, Citrix, and many other 3rd party products provide this functionality. I like to keep things simple…I consider the use of Windows Roaming Profiles (wait…don’t be mad…let me explain).

Roaming profiles are a solution we are all familiar with, and one that has met with varying degrees of acceptance. In the days of desktops being scattered between multiple locations, or just all over the corporate headquarters, we had to rely on the network to deliver the Roaming Profile to the user before they could complete their login to the desktop. This could take a lot of time, especially if you were logging in from a remote location over a slow WAN link.

Roaming profile corruption can easily be solved by enabling snapshots (from the SAN or from the Windows OS itself), so you can restore a profile from a day or two prior. I have seen profiles corrupt, but that is a minor one-off issue that does not surface all the time.

Now, with our desktops living in the datacenter on high-speed links, it no longer takes a long time for users to log in to their desktops. This is true in a centralized model as well, where a remote site uses thin clients to connect to the desktop (which actually resides in the datacenter back at headquarters), and the file servers housing the users’ roaming profiles are VERY local to the servers running the desktops…

Now, this is not to say that this will be how all VDI solutions would be rolled out. Many companies explore other software packages that can manage the users’ profiles. What I am stating here, is similar to what I stated about choosing a desktop to kick the tires with…start with something you are very familiar with, and introduce as few variables as you explore new technologies.

Understanding & Publishing The Applications In Your Environment

The third of 3 major components of the VDI solution. Application delivery is usually where you can invest the most time during the transition to a VDI solution. Many different technologies exist, and a hybrid solution can easily be what your organization requires to succeed.

Installing Applications Directly Into Each Virtual Machine

While this can still be done, and may be required by some applications, it is the traditional method of delivering applications to desktop operating systems. There is no learning curve here, but there are now considerations around how this would affect the delivery of desktops to users, and how much space is required to maintain the applications (now that we are using SAN disk, we need to be more conscious of how much space we consume, as SAN disk is somewhat expensive per GB). I like to acknowledge that this works, but I like to consider other options for delivery of the application stacks.
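To make the SAN space concern concrete, here is a rough back-of-the-envelope sketch. It assumes the simplest case, where every virtual desktop is a full copy carrying its own operating system and installed applications, with no deduplication or cloning technology reducing the footprint. The function names and all of the numbers are made up for illustration; plug in your own.

```python
def installed_app_storage_gb(desktops: int, base_image_gb: float, apps_gb: float) -> float:
    """Total SAN space when each desktop holds its own copy of the OS and apps.

    Assumption: full copies per desktop, no deduplication or shared disks.
    """
    return desktops * (base_image_gb + apps_gb)


def apps_only_storage_gb(desktops: int, apps_gb: float) -> float:
    """The part of that total consumed purely by locally installed applications."""
    return desktops * apps_gb


if __name__ == "__main__":
    # Hypothetical example values, not measurements.
    desktops = 100
    base_image_gb = 10.0
    apps_gb = 5.0

    total = installed_app_storage_gb(desktops, base_image_gb, apps_gb)
    apps = apps_only_storage_gb(desktops, apps_gb)
    print(f"Total SAN space: {total:.0f} GB, of which applications: {apps:.0f} GB")
```

Even with small per-desktop numbers, the application portion grows linearly with the number of desktops, which is why the other delivery options are worth a look.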

Delivering Applications via Terminal Services Published Applications

This is something that Windows Terminal Server teams and Citrix teams have done for many years. Put a shortcut on a user’s desktop, and that shortcut runs an application that is managed on a Terminal Server. This is a solution that still works wonderfully, because it keeps the actual application separated from the desktop operating system. I still recommend people consider this option for application delivery, even though it can raise the initial cost of the project (you will realize ROI as you maintain an application centrally at the Terminal Servers, rather than at the disparate desktops).

This is not a new technology, and has been in many IT organizations for the past decade. Why not consider it?

Managed Application Delivery

Any tool that we have used in the past can still be considered. I will just take one easy example, and that would be applications that are delivered via Group Policies (you can insert your tool name to replace Group Policies). While it is still possible to deliver applications in this fashion, we would still be tying the application to the desktop the user is working on. I like to try to transition away from this, though it is not always possible.

If I am continuing to use Group Policies, I may need to rethink how these policies are tied into Active Directory. Do I still want to deliver applications to computer objects, or is it now more appropriate to deliver these applications to user objects? How will application installation affect the VDI images (and more importantly, how much space is consumed on the SAN)? How do I manage updates of these applications, and full upgrades as the versions inevitably increment year after year? Again, I would not throw out this type of technology, but I would not use this as the primary mechanism for delivery of applications if it can be avoided.

Virtualize Your Applications

Any tool that can wrap up an application into a single EXE file (the same way that Virtual Machines are really just VMDK and VMX files) sounds too good to be true. I’ll discuss ThinApp, and you can insert your tool of choice.

Virtualizing your application into an EXE file requires you to have an understanding of what your users need to do with an application, and then you capture that installation, and publish the EXE to the user. It might exist on a file share, and be accessed by many users at one time. It might be copied onto a USB stick, and shared with contractors or remote users. It might be copied to a laptop so a road warrior can always have those tools with him as he waits for his flight to board.

I like the idea of virtualizing the application. I want to ThinApp every application I can, so I have a strong bias here…but after you dive into how to maintain ThinApps for a wide user base, you may have the same opinion. Updating an application for end users is as simple as creating a new ThinApp package, and replacing the existing EXE file they are using…next time they start the application, the new version is running!
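The "replace the existing EXE" step can be sketched as a small script. This is only an illustration of swapping a file on a share, not a ThinApp feature or API: the function name and paths are hypothetical, and it assumes the published EXE is not locked by a running session at the moment of the swap (a locked file would make the replace fail, which this sketch does not handle).

```python
import os
import shutil
from pathlib import Path


def publish_package(new_build: Path, published: Path) -> None:
    """Replace the published EXE with a newly built package.

    The new build is copied next to the published file first, then moved
    into place with os.replace, so the published name never points at a
    partially copied file.
    """
    staging = published.with_name(published.name + ".staging")
    shutil.copy2(new_build, staging)   # copy the new package alongside the old one
    os.replace(staging, published)     # swap it in under the published name


if __name__ == "__main__":
    # Hypothetical paths; adjust to your build output and file share.
    build = Path("build/MyApp.exe")
    share = Path("share/MyApp.exe")
    if build.exists() and share.parent.exists():
        publish_package(build, share)
```

The next time a user launches the shortcut that points at the published file, they pick up the new package.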

Summary Of Application Delivery

The ability to reconsider how you manage, deploy, update, and control the applications end users consume on your business computers is going to be a large part of your VDI Proof of Concept. Be ready to explore the combination of the varied methods available to deliver applications. I truly believe that by separating the application from the traditional dependencies that currently exist (when we install applications onto the desktop), a VDI solution can actually deliver on the promised ROI when you consider keeping applications up to date, running, and more importantly…keeping the user productive with those applications.

Selecting VDI Proof Of Concept Candidates

This is, by far, one of the easiest choices for me, if I have control over the Proof of Concept testers. The IT team. These are the people who will understand any problems that they are running into, can articulate the problems as well as the resolutions, AND they would be the ones responsible for supporting users who will use a VDI solution. I can think of no reason why they should not be the first adopters of the VDI solution.

Thin Client or Repurposed Desktop PCs?

Do you go out and buy a ton of Thin Clients to replace the desktop PCs? Possibly, but realistically not during the kicking of the tires or Proof of Concept. You will realistically be purchasing a few Thin Clients, perhaps even from various manufacturers, so you can find the ones that deliver the desktop experience your users require, while providing an easy and streamlined mechanism for managing the thin clients. (I would not want to replace the traditional desktop PC with a device that needs just as much management, just so I can get to the ‘cool’ new Virtual Desktop.) Repurposing PCs? Fine for the initial testing and Proof of Concept (while testing Thin Client devices). My long term vision is to get rid of the desktop PCs, be it during the normal lifecycle replacement, or as I roll a VDI solution out to different groups in the organization.

This goes back to the facts that Thin Clients use less power, are easier to manage, and are just dumb devices that allow a user to access their desktop. Replacing every desktop in an organization just for the sake of replacement is not necessarily a great idea, but it is a great end result that you can be shooting for as you roll out a VDI solution.

Selecting a Thin Client can be made easier when you work with a partner who can provide you an unbiased recommendation to help you align with your business needs (keeping the user base productive and happy). The right partner has done this before, understands the end user experience, and knows your environment intimately enough to make some great suggestions.

Starting Your Proof Of Concept

So each organization’s needs will vary, but let us assume the best of situations exists for our Proof Of Concept.

Dedicate a server for the Proof Of Concept

We would want to dedicate a well configured server to running our Virtual Desktops. Be it a VMware, Citrix, or Microsoft solution, it is in your best interest to see how well a server can run the desktops. Dedicated resources give you an accurate view of the levels of performance you can provide for your user base, while centralizing those resources.

Dedicate some time for your IT staff to ‘play’ with the technology

The IT staff is your most trusted resource internally for technology consumption. Give them the power to spend some time exploring the way this technology can change the way you support and deliver the desktop operating system to your users, as well as the applications they require to stay productive. As they will be the team that gets the first Virtual Desktops, ask them to use ONLY the VDI delivered desktop operating system to truly get a feel for the way this solution can work.

Define goals for the success of the Proof Of Concept

Why even do a Proof of Concept if there is no goal? You can make it as simple or complicated as you want, but I like to keep it simple. Is there any difference from running a traditional desktop PC? Does this impact the users’ productivity? What options do I have to address any issues that arise? How are we handling USB keys? Does the operating system & the applications perform as well as on a physical desktop? How invasive is a major desktop patch in the world of VDI for my organization?

Whatever you define as questions you need answered, make sure the team knows about these, has answers to them, and can provide you with a report (even if it is not a formal written report) regarding the measured criteria you set forth in your VDI Proof of Concept.
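If it helps to keep the team honest, the questions can live in something as simple as the sketch below. The structure and names are my own illustration, not part of any VDI product; the questions are the ones listed above, and the sample answer is invented. It simply tracks which questions still have no answer before you call the Proof of Concept done.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Criterion:
    """One question the Proof of Concept must answer."""
    question: str
    answer: str = ""  # stays empty until the team has an answer


def unanswered(criteria: List[Criterion]) -> List[str]:
    """Return the questions that still have no answer recorded."""
    return [c.question for c in criteria if not c.answer.strip()]


if __name__ == "__main__":
    criteria = [
        Criterion("Is there any difference from running a traditional desktop PC?"),
        Criterion("Does this impact the users' productivity?"),
        Criterion("How are we handling USB keys?"),
        Criterion("How invasive is a major desktop patch?"),
    ]
    # Hypothetical answer recorded by the team during testing.
    criteria[2].answer = "Redirected through the thin client; tested with two devices."

    for question in unanswered(criteria):
        print("Still open:", question)
```

An informal list like this is enough; the point is that every question you defined ends up with an answer someone can report back.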

A Trusted Advisor can expedite a Proof of Concept

At the risk of including some sales-sounding lingo here, I think this is an important item to include. A Partner (be it a consultant, reseller, or anyone who has experience in the solution) is an important resource to bring on board when considering a shift in the way you deliver desktops. They will bring best practices and past experiences, and can assist you in avoiding a long, drawn-out Proof of Concept. VDI itself is still relatively new, even though the use of a Virtualized Infrastructure is now an expected datacenter skill. If you are considering this as a solution for your IT organization, kick the tires a bit yourself. But when you want to design, scale, and build out a procedural change in the management of the desktop infrastructure, bring in someone who has done it before. I have found that I have been able to lead teams in the transition from traditional desktop management, and empower those teams to take advantage of the benefits of having a VDI delivered desktop solution.